ChatGPT has become a go-to tool for millions, offering quick answers and creative solutions. However, many users face frustrating delays, even with advanced models like GPT-4. These slowdowns can disrupt workflows and leave people searching for answers.

Several factors contribute to these delays. High server demand, especially during peak times, can strain the service. Additionally, the complexity of the AI model and internet connectivity issues play a role. Real-world testing by platforms like Android Authority and PowerBrain AI highlights these challenges.

This article dives into the technical causes behind these slowdowns and offers practical fixes. Whether you’re a free or paid user, understanding these factors can help you optimize your experience. We’ll also explore tools like OpenAI’s status page and alternatives like Gemini for comparison.

Key Takeaways

- ChatGPT delays affect both free and paid users, including GPT-4 subscribers.

- Server demand spikes and model complexity are primary causes.

- Internet connectivity issues can also impact performance.

- OpenAI’s status page is a useful diagnostic tool.

- Alternative chatbots like Gemini offer additional options.

Why Is ChatGPT So Slow? An Overview

Delays in AI tools can be frustrating, especially when relying on them for quick answers. Understanding the factors behind these slowdowns helps users optimize their experience. Three key elements play a role: computational power, server load, and model complexity.

The Role of Computational Power

Advanced AI models require significant processing power to generate responses. For instance, GPT-4 Turbo supports a context window of up to 128,000 tokens, compared with 4,096 tokens for the original GPT-3.5. Processing that much context demands more compute, which can reduce speed.

Processing times vary based on the task. Ideal conditions allow for 20ms per token, but overloaded systems can take 150ms or more. This difference highlights the importance of computational efficiency.
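To make the per-token figures above concrete, here is a minimal sketch of the arithmetic. The 300-token answer length is a hypothetical example, and the function name is illustrative. The estimate covers generation time only, so queueing and network delay would come on top.

```python
def estimate_generation_seconds(output_tokens: int, ms_per_token: float) -> float:
    """Return the estimated time, in seconds, to generate output_tokens tokens."""
    if output_tokens < 0 or ms_per_token < 0:
        raise ValueError("token count and per-token latency must be non-negative")
    return output_tokens * ms_per_token / 1000.0


# Hypothetical answer length chosen for illustration, not a measured value.
ANSWER_TOKENS = 300

ideal = estimate_generation_seconds(ANSWER_TOKENS, 20)        # 20 ms per token
overloaded = estimate_generation_seconds(ANSWER_TOKENS, 150)  # 150 ms per token

print(f"ideal: {ideal:.1f} s, overloaded: {overloaded:.1f} s")
```

Under these assumptions the same answer takes about 6 seconds on a healthy system and about 45 seconds on an overloaded one, which is why load matters more than small differences in prompt wording.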

Server Load and Demand

High server demand during peak hours can cause delays. Platforms like Android Authority have tested latency, showing slower response times when user activity spikes. Think of it as digital highway congestion.

Free users often face longer wait times compared to Plus subscribers, who get priority access. This tiered system helps manage server load but can still lead to delays during high traffic.

Model Complexity and Response Generation

The complexity of AI models also affects performance. GPT-4 is widely reported to have around 1.7 trillion parameters, although OpenAI has not confirmed the figure, and it prioritizes accuracy over speed. This trade-off ensures high-quality responses but can slow down the process.

For example, Anakin AI’s data shows GPT-4 averages 2.8 seconds per response, while alternatives like Gemini respond in 1.2 seconds. This difference underscores the impact of model design on performance.

Common Causes of ChatGPT’s Slow Response Times

Response delays in AI tools often stem from multiple technical factors. These include high server demand, complex processing requirements, and external issues like internet connectivity. Understanding these elements can help users optimize their experience.

High Server Load During Peak Times

Server demand spikes during peak hours, leading to slower response times. For example, between 9 AM and 5 PM EST, delays can increase by up to 63% as more users compete for the same capacity.

Free users often face longer wait times compared to Plus subscribers. Paid users get priority access, but even they can experience delays during high traffic periods. Managing server load is a constant challenge for AI platforms.
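If you want to schedule heavy tasks around the busy window, a small check like the one below can help. It is a sketch that assumes the 9 AM to 5 PM EST window quoted above, treats EST as a fixed UTC-5 offset (ignoring daylight saving time), and uses a hypothetical helper name, `is_peak_time`.

```python
from datetime import datetime, timedelta, timezone

# EST as a fixed UTC-5 offset; this sketch deliberately ignores daylight saving time.
EST = timezone(timedelta(hours=-5), name="EST")
PEAK_START_HOUR = 9   # 9 AM EST, taken from the article
PEAK_END_HOUR = 17    # 5 PM EST, taken from the article


def is_peak_time(moment: datetime) -> bool:
    """Return True if a timezone-aware moment falls inside the peak window."""
    if moment.tzinfo is None:
        raise ValueError("moment must be timezone-aware")
    local = moment.astimezone(EST)
    return PEAK_START_HOUR <= local.hour < PEAK_END_HOUR


now = datetime.now(timezone.utc)
print("peak" if is_peak_time(now) else "off-peak")
```

The end hour is treated as exclusive, so 5:00 PM EST already counts as off-peak. Adjust the constants if your own experience suggests a different busy period.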

Complex Prompts and Processing Requirements

The complexity of user prompts directly impacts processing time. A short factual query may return almost immediately, while a request that calls for a long, multi-part answer can take several seconds, because the model generates its reply one token at a time. Advanced models like GPT-4 handle more tokens but demand greater computational power.

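Hardware matters as well. Data-center GPUs such as the Nvidia A100 generate tokens far faster than consumer-grade GPUs; one cited figure is 624 tokens per second, though actual throughput depends on the model and batch size. This highlights the importance of hardware in maintaining speed and efficiency.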

Internet Connection and Device Performance

Internet connectivity plays a crucial role in response times. Wi-Fi connections often have higher latency compared to Ethernet. ISP throttling can also slow down API responses, especially during peak usage.

Device performance is another key factor. Systems with 4GB of RAM struggle compared to those with 16GB. Background apps consuming CPU resources can further degrade performance.

| Connection Type | Average Latency (ms) |
|---|---|
| Wi-Fi | 45 |
| Ethernet | 12 |

Browser choice also matters. Chrome often outperforms Safari in handling AI requests. Real-world tests show that a 3D rendering workstation delivers faster responses than a budget laptop. These factors collectively influence the speed of AI tools.

How to Check if ChatGPT is Slow Today

When ChatGPT lags, it’s essential to pinpoint the root cause quickly. Several factors, from server issues to your own setup, can affect its performance. Here’s a practical guide to diagnose and address these problems.

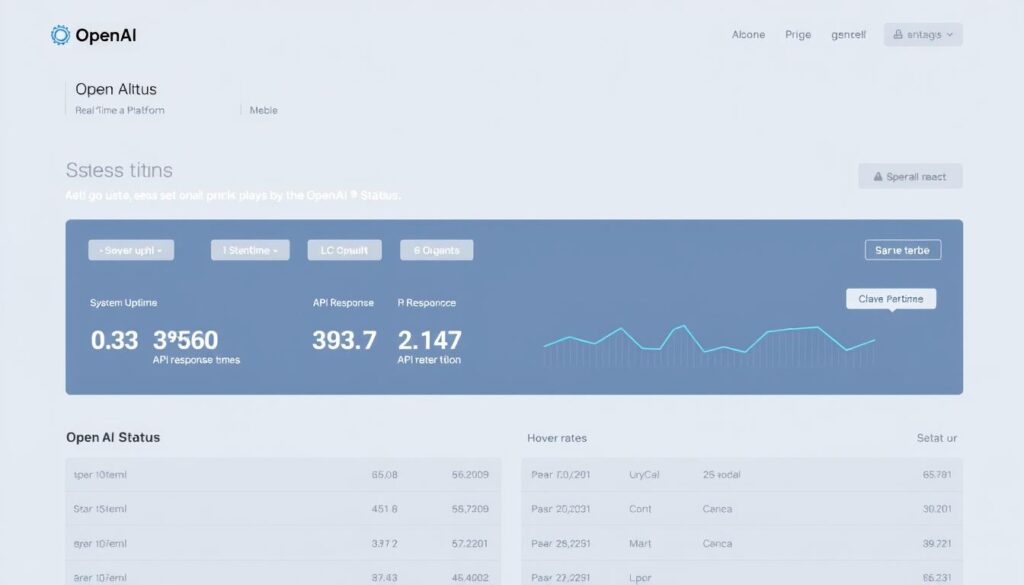

Using OpenAI’s Status Page

OpenAI’s status page is a valuable tool for checking the service’s health. It uses color codes: green for normal, yellow for minor issues, and red for major disruptions. Visit status.openai.com to see real-time updates.

If the page shows yellow or red, the delay isn’t on your end. This step helps you rule out external factors and focus on other potential causes.

Monitoring Your Internet Connection

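Your connection plays a significant role in ChatGPT’s performance. Use tools like Fast.com or Speedtest.net to measure your internet speed. Because ChatGPT mostly sends and receives text, a stable, low-latency connection matters more than raw bandwidth; the 25 Mbps often recommended is a comfortable margin rather than a strict requirement.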
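A speed test reports bandwidth, but latency to the service is often the more telling number. The sketch below times a TCP handshake as a rough proxy for network latency. The helper names are hypothetical, and the handshake time is only an approximation of what the browser experiences.

```python
import socket
import statistics
import time


def measure_connect_ms(host: str, port: int = 443, timeout: float = 3.0) -> float:
    """Time one TCP handshake to host:port and return it in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # the connection is opened only to time the handshake
    return (time.perf_counter() - start) * 1000.0


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Return the median and worst-case latency for a non-empty list of samples."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    return {"median_ms": statistics.median(samples_ms), "max_ms": max(samples_ms)}
```

For example, `summarize([measure_connect_ms("chatgpt.com") for _ in range(5)])` takes five samples against a host of your choice. Comparing the median on Wi-Fi and on Ethernet shows whether the local network is a meaningful part of the delay.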

For Chrome users, the network throttling tool built into DevTools can simulate different connection speeds. Open DevTools (F12 or Ctrl+Shift+I), switch to the Network tab, and pick a preset from the throttling dropdown to test how ChatGPT behaves on slower networks.

Testing with Different Devices and Browsers

Your device and browser can also impact ChatGPT’s speed. Compare performance across devices like an M1 Mac and an i5 PC. Similarly, test browsers like Chrome 118+ and Firefox 120+ for optimal results.

iOS Safari and Android Chrome often show differences in response times. Clearing browser cache and cookies can also resolve issues. Use keyboard shortcuts like Ctrl+Shift+Delete (Windows) or Command+Shift+Delete (Mac) for quick clearing.


Practical Solutions to Speed Up ChatGPT

Improving ChatGPT’s performance doesn’t have to be complicated. With a few simple adjustments, you can achieve faster response times and a smoother experience. Below are actionable solutions to optimize your interactions with the tool.

Clear Browser Cache and Cookies

Over time, your browser accumulates data that can slow down performance. Clearing the cache and cookies can free up resources and improve speed. Here’s how to do it in Chrome:

- Open Chrome and click the three-dot menu in the top-right corner.

- Select More Tools > Clear Browsing Data.

- Choose a time range and check Cookies and other site data and Cached images and files.

- Click Clear Data to complete the process.

Upgrade to ChatGPT Plus for Priority Access

Subscribing to ChatGPT Plus offers significant benefits, including reduced queue times. According to Anakin AI, Plus users experience 72% fewer delays during outages. This subscription ensures priority access to the model, even during peak hours.

For frequent users, the ROI of ChatGPT Plus is clear. It’s a practical solution for those who rely on the tool for work or creative projects.

Simplify Prompts for Faster Responses

Complex prompts require more processing time. Simplifying your queries can cut response times by up to 40%. For example, instead of asking, “Explain the economic theories of Keynes and Friedman in detail,” try “Summarize Keynesian economics.”

Shorter, focused prompts reduce the complexity of the task, allowing the model to generate answers more efficiently.
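To compare prompt sizes before sending them, a rough estimate is usually enough. The sketch below assumes the common rule of thumb of about four characters per token for English text. It is not a real tokenizer, and the function name is illustrative.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text, not an exact tokenizer


def rough_token_count(text: str) -> int:
    """Approximate the number of tokens in text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


detailed = "Explain the economic theories of Keynes and Friedman in detail"
focused = "Summarize Keynesian economics"

print(rough_token_count(detailed), rough_token_count(focused))
```

The focused prompt is roughly half the size, but most of the time saved usually comes from the shorter answer it invites, since the reply is generated token by token.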

Disable VPNs for Direct Connections

Using a VPN can add latency to your connection, slowing down ChatGPT’s performance. Disabling your VPN ensures a direct route to the servers, improving speed.

If you need privacy, consider alternatives like Cloudflare WARP, which may add less latency than a traditional VPN.

Conclusion

Optimizing your experience with AI tools requires understanding the balance between server performance and user habits. Proactive monitoring during peak hours can help identify when delays are most likely. Free-tier users may benefit from scheduling tasks during off-peak times to avoid congestion.

For those seeking consistent speed, upgrading to ChatGPT Plus offers priority access, ensuring smoother interactions. Combining this with tools like Gemini can provide a hybrid solution for critical tasks. Regular browser maintenance, such as clearing cache and cookies, also enhances performance.

Stay informed about upcoming GPT-4 optimizations from OpenAI to leverage the latest advancements. By adopting these strategies, you can master AI tools and maximize their potential in your daily workflow.